The trickle-down theory of economics has been comprehensively disproved by multiple studies in different countries over decades. Why should it work now, in the UK? CC-licensed photo by Ian Sane on Flickr.

You can sign up to receive each day’s Start Up post by email. You’ll need to click a confirmation link, so no spam.

A selection of 9 links for you. Use them wisely. I’m @charlesarthur on Twitter. Observations and links welcome.

LinkedIn ran social experiments on 20 million users over five years • The New York Times

Natasha Singer:

»

In experiments conducted around the world from 2015 to 2019, LinkedIn randomly varied the proportion of weak and strong contacts suggested by its “People You May Know” algorithm, the company’s automated system for recommending new connections to its users. The tests were detailed in a study published this month in the journal Science and co-authored by researchers at LinkedIn, M.I.T., Stanford and Harvard Business School.

LinkedIn’s algorithmic experiments may come as a surprise to millions of people because the company did not inform users that the tests were underway.

Tech giants like LinkedIn, the world’s largest professional network, routinely run large-scale experiments in which they try out different versions of app features, web designs and algorithms on different people. The longstanding practice, called A/B testing, is intended to improve consumers’ experiences and keep them engaged, which helps the companies make money through premium membership fees or advertising. Users often have no idea that companies are running the tests on them.

But the changes made by LinkedIn are indicative of how such tweaks to widely used algorithms can become social engineering experiments with potentially life-altering consequences for many people. Experts who study the societal impacts of computing said conducting long, large-scale experiments on people that could affect their job prospects, in ways that are invisible to them, raised questions about industry transparency and research oversight.

“The findings suggest that some users had better access to job opportunities or a meaningful difference in access to job opportunities,” said Michael Zimmer, an associate professor of computer science and the director of the Center for Data, Ethics and Society at Marquette University. “These are the kind of long-term consequences that need to be contemplated when we think of the ethics of engaging in this kind of big data research.”

…The study in Science tested an influential theory in sociology called “the strength of weak ties,” which maintains that people are more likely to gain employment and other opportunities through arms-length acquaintances than through close friends.

«

Exactly like the Facebook “study” in 2014, when more than half a million users had their News Feeds manipulated to see if positive emotions begat positive posts, and negative ones begat gloomier ones. (They do.) Like LinkedIn now, Facebook claimed then it was covered by its Ts and Cs. (Very questionable claim, both times.) Social media sites seem unable to let go of the power they have to do seemingly trivial things like this. They couldn’t recruit people properly into a double-blind trial? No, of course not.
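
For anyone unfamiliar with the mechanics, here is a minimal sketch of how an A/B test might split users into groups. It hashes a user ID together with an experiment name, so the same person always lands in the same variant. The function name `assign_variant`, the experiment label and the user IDs are all hypothetical; this illustrates one common bucketing approach and is not a description of how LinkedIn or Facebook actually ran their experiments.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to one variant of an experiment.

    Hypothetical helper: hashing the experiment name with the user ID means
    the assignment is stable across visits and independent between experiments.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Treat the hash as a large integer and pick a variant by remainder.
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]
```

Calling `assign_variant("user-42", "pymk-weak-ties")` returns the same letter every time, and across many users the split comes out roughly even. Nothing in the assignment step asks the user anything, which is rather the point of the complaint above.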


How the New York Times A/B tests its headlines • TJCX

Tom CJ:

»

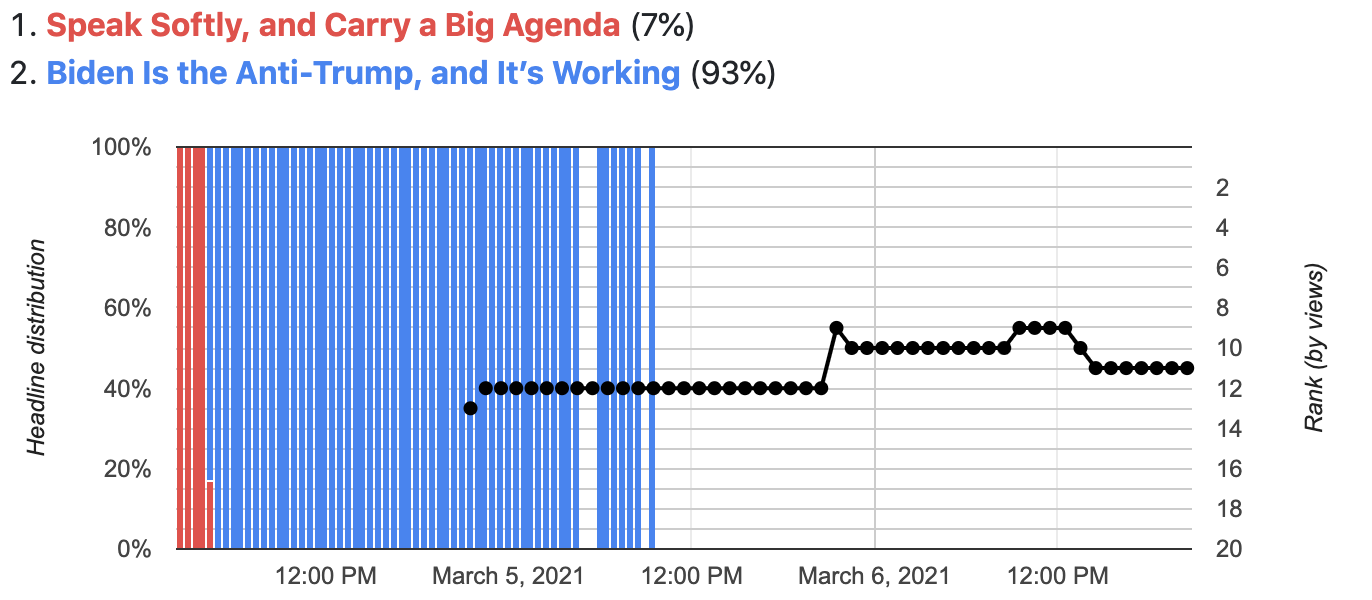

most headline swaps are clearly A/B tests looking for more clicks. Here’s an article about Biden’s governing style, with a pretty dramatic headline switch:

The only reason to make this kind of change is if you’re trying to boost engagement. And it worked! This article broke into the “most viewed” list a few hours after the headline swap (which supports my theory that liberals love reading about Trump).

Note: The above chart (and others in this post) make it look like a headline switches completely from one to another, without any actual A/B testing. This is just an artifact of how I’ve grouped the columns—each bar represents half an hour, but within that half my scraper sees the headline change back and forth many times, despite the colors being grouped together.

But not all A/B tests have such success. Here’s an A/B test that definitely failed (you might have to squint to see the tiny blue smidge on a smaller screen):

I hope this failure didn’t discourage the kooky NYT editor behind “Jumping Jehoshaphat!” The NYT could definitely use more Bugs Bunny-isms.

«

The difference with this A/B testing is that it doesn’t change your job prospects.


Twitter Jan. 6 whistleblower Anika Collier Navaroli speaks to The Washington Post • The Washington Post

Drew Harwell:

»

Navaroli, a former policy official on the team designing Twitter’s content-moderation rules, testified to the committee that the ban came only after Twitter executives had for months rebuffed her calls for stronger action against Trump’s account. Only after the Capitol riot, which left five dead and hundreds injured, did Twitter move to close his 88 million follower account.

Tech companies traditionally require employees to sign broad nondisclosure agreements that restrict them from speaking about their work. Navaroli was not able to speak in detail about her time at Twitter, said her attorney, Alexis Ronickher, with the Washington law firm Katz Banks Kumin, who joined in on the interview.

But Navaroli told The Post that she has sat for multiple interviews with congressional investigators to candidly discuss the company’s actions. A comprehensive report that could include full transcripts of her revelations is expected to be released this year.

…“Regulating speech is hard, and we need to come in with more nuanced ideas and proposals. There’s got to be a balance of free expression and safety,” she said. “But we also have to ask: whose speech are we protecting at the expense of whose safety? And whose safety are we protecting at the expense of whose speech?”

…Navaroli left Twitter last year and is now researching the impact of hate-speech moderation through a fellowship at Stanford University. She said she hopes the testimony she gave the committee will help inspire more Silicon Valley insiders to speak publicly about their companies’ failures to fight viral misinformation and extremist speech.

«

Forget trickle down, what the UK needs is middle-out economics • The Guardian

Eric Beinhocker and Nick Hanauer:

»

Jared Bernstein, a member of Joe Biden’s council of economic advisers, summarised the evidence against trickle-down economics in a presentation to the joint economic committee of Congress several years ago. Bernstein noted that, if the trickle-down theory was correct, we would expect to see that, when tax rates go down, growth goes up and vice versa. But using data stretching from 1947 to 2015, Bernstein showed in no case was that true.

Tax cuts not only failed to stimulate gross domestic product growth, they also failed to stimulate employment growth, wage growth, investment growth or productivity growth. And there were plenty of periods when taxes were high, particularly for high earners, and so too was growth.

Not only has the trickle-down effect failed in the US but it has failed in the UK and 16 other developed countries. A study by researchers at the London School of Economics showed that over the past 50 years, the impact of tax cuts on growth across all these countries is “statistically indistinguishable from zero”.

Researchers have, however, found one big impact of trickle-down policies: they redistribute income from working people to the wealthy – they lead to trickle-up. A study by the Rand corporation that we were involved with shows decades of trickle-down policies in the US redistributed about $50tn in wage growth from the bottom 90% of earners to the top 1%.

It turns out that if you massively cut taxes for rich people, and at the same time suppress worker wages and reduce worker power (in the name of “deregulation” and “market efficiency”), it’s really good for rich people.

«

I guess that Truss (and Kwarteng) will say that all those examples in all those years were because they didn’t implement trickle-down economics properly. (Professor Eric Beinhocker is executive director of the Institute for New Economic Thinking at the Oxford Martin School, Oxford University, and Nick Hanauer is the founder of Civic Ventures in Seattle.)


Strategy, logistics and morale: why the fundamentals of war haven’t changed • Sunday Telegraph

Mike Martin, of the Royal United Services Institute, is a military analyst:

»

It is because of these three factors – strategy, logistics and morale – that Russia will lose the war. Not because Ukraine had some drones that it bought from Amazon, and not because Putin has rashly called up some reservists to the front line. Unfortunately for them, without, you guessed it, strategy, logistics or morale, they will go the way of those who have gone before them: into the Ukrainian meat grinder.

There is a deeper question about why we constantly seek to reimagine war – to say that it has changed, or that it is something that it is not. It is a very difficult question to answer, but as humans we like to imagine that new technology will help us win wars, or will help us avoid wars. Perhaps we want to avoid the brutally chaotic nature of war, or ignore the reality that it is a fight to the death. Perhaps it is something particular to democracies, whose populations like to imagine that technology can help us fight wars at arm’s length, so that they will not touch our lives.

Paradoxically, this thinking makes us more likely to fight wars, rather than less.

«

Martin thinks we are seeing the beginning of the end of the war, and that Putin won’t use a nuclear weapon there (because the risk of escalation and thus assured death is too high). Here’s hoping the military expert is calling it right.


How AI helped Darth Vader’s voice remain young • Vanity Fair

Anthony Breznican:

»

Belyaev is a 29-year-old synthetic-speech artist at the Ukrainian start-up Respeecher, which uses archival recordings and a proprietary A.I. algorithm to create new dialogue with the voices of performers from long ago. The company worked with Lucasfilm to generate the voice of a young Luke Skywalker for Disney+’s The Book of Boba Fett, and the recent Obi-Wan Kenobi series tasked them with making Darth Vader sound like James Earl Jones’s dark side villain from 45 years ago, now that Jones’s voice has altered with age and he has stepped back from the role.

…What Respeecher could do better than anyone was recreate the unforgettably menacing way that Jones, now 91, sounded half a lifetime ago. Wood estimates that he’s recorded the actor at least a dozen times over the decades, the last time being a brief line of dialogue in 2019’s The Rise of Skywalker. “He had mentioned he was looking into winding down this particular character,” says Wood. “So how do we move forward?”

When he ultimately presented Jones with Respeecher’s work, the actor signed off on using his archival voice recordings to keep Vader alive and vital even by artificial means—appropriate, perhaps, for a character who is half mechanical. Jones is credited for guiding the performance on Obi-Wan Kenobi, and Wood describes his contribution as “a benevolent godfather.” They inform the actor about their plans for Vader and heed his advice on how to stay on the right course.

«

Robin Williams specified in his will that he didn’t want his voice to be recreated in any way. James Earl Jones is clearly on the other side of that position. In which case Respeecher is happy to help out.


Introducing Whisper • OpenAI

»

Whisper is an automatic speech recognition (ASR) system trained on 680,000 hours of multilingual and multitask supervised data collected from the web. We show that the use of such a large and diverse dataset leads to improved robustness to accents, background noise and technical language. Moreover, it enables transcription in multiple languages, as well as translation from those languages into English. We are open-sourcing models and inference code to serve as a foundation for building useful applications and for further research on robust speech processing.

The Whisper architecture is a simple end-to-end approach, implemented as an encoder-decoder Transformer. Input audio is split into 30-second chunks, converted into a log-Mel spectrogram, and then passed into an encoder. A decoder is trained to predict the corresponding text caption, intermixed with special tokens that direct the single model to perform tasks such as language identification, phrase-level timestamps, multilingual speech transcription, and to-English speech translation.

«

Simple! Early trials (by others) suggest that it’s very good, even on mumbled speech, and that it capitalises names and spells correctly. Not all of the training data is English, either, which offers the prospect of multilingual translation. You can install it on your own local machine if this GitHub page makes sense to you.
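
The first step OpenAI describes, cutting the audio into 30-second chunks, can be sketched in a few lines of plain Python. This is an illustration only, not OpenAI's code: the helper `split_into_chunks` is hypothetical, the 16kHz sample rate is an assumption about the input audio, and the spectrogram and Transformer stages are left out entirely.

```python
SAMPLE_RATE = 16_000   # samples per second; assumed for this sketch
CHUNK_SECONDS = 30     # chunk length taken from OpenAI's description
CHUNK_SAMPLES = SAMPLE_RATE * CHUNK_SECONDS


def split_into_chunks(samples, chunk_samples=CHUNK_SAMPLES):
    """Split a sequence of audio samples into fixed-length chunks.

    Hypothetical helper: the final chunk is padded with silence (zeros)
    so that every chunk has exactly the same length.
    """
    chunks = []
    for start in range(0, len(samples), chunk_samples):
        chunk = list(samples[start:start + chunk_samples])
        # Pad a short final chunk up to the full length.
        chunk.extend([0.0] * (chunk_samples - len(chunk)))
        chunks.append(chunk)
    return chunks
```

A 70-second recording would come out as three chunks, the last holding 10 seconds of audio and 20 seconds of padding. Each chunk would then be turned into a log-Mel spectrogram before the encoder sees it.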


How to create your own sound recognition alarms (iOS 16) • iPhone Life

Kenya Smith:

»

Sound Recognition is a feature that was added to iPhones to help users with disabilities be aware of certain sounds such as sirens, smoke detectors, and breaking glass. When Sound Recognition was first introduced, you could set up alerts for pre-programmed options. Now, the new iOS 16 will allow you to create custom sound recognition alerts, which is helpful if you have medical devices or appliances that have unique electronic jingles. Let’s learn how to create your own Sound Recognition alerts.

«

This new feature is found in the Accessibility function, and does include the proviso that it “should not be relied upon… where you may be harmed or injured, in high-risk or emergency situations, or for navigation.”

But what I don’t quite get about this feature, which helps people with disabilities, is that its response to a sound is to… play a sound. Wouldn’t it make sense to flash the screen or display a modal dialog or something similar that doesn’t rely on sound? (For some reason this whole thing puts me in mind of this very old sketch.)


Crypto darling Helium promised a ‘people’s network.’ Instead, its executives got rich • Forbes

Sarah Emerson:

»

A review of hundreds of leaked internal documents, transaction data and interviews with five former Helium employees suggest that as Helium insiders touted the democratized spirit of their “People’s Network,” they quietly amassed a majority of the tokens earned at the project’s start, hoarding much of the wealth generated in its earliest and most lucrative days.

Forbes identified 30 digital wallets that appear to be connected to Helium employees, their friends and family and early investors. This group of wallets mined 3.5 million HNT — almost half of all Helium tokens mined within the first three months of the network’s launch in August 2019, according to a Forbes analysis that was confirmed by blockchain forensics firm Certik. Within six months, more than a quarter of all HNT had been mined by insiders — valued at roughly $250m when the price of Helium peaked last year. Even after the crypto price crashed, the tokens are still worth $21m today.

Cryptocurrency companies typically compensate early investors and employees for building their offerings with an allotment of tokens, and disclose these rewards in blog posts or white papers. While Helium and its executives have publicly discussed their incentive plan — a scheme called Helium Security Tokens, or HST, which guarantees about a third of all HNT for insiders — they haven’t previously disclosed the additional windfall taken from Helium’s public token supply, worth millions, that was identified by Forbes.

This means that at a time when Helium rewards per hotspot were at their highest, insiders claimed a majority of tokens, while little more than 30% went to Helium’s community. Each hotspot earned an average 33,000 HNT in August 2019, according to blockchain data; today, each hotspot only earns around 2 HNT per month. Some insiders exploited vulnerabilities known to the company to increase their hauls even more.

«

We’ve heard previously about Helium, which encouraged people to buy its routers and said they would get tokens when random people logged into them – which is just bonkers. Yet Helium received huge amounts of venture capital funding. A pipeline transfer of wealth from the excessively rich, to the easily convinced, to the avaricious.


• Why do social networks drive us a little mad?
• Why does angry content seem to dominate what we see?
• How much of a role do algorithms play in affecting what we see and do online?
• What can we do about it?
• Did Facebook have any inkling of what was coming in Myanmar in 2016?

Read Social Warming, my latest book, and find answers – and more.

Errata, corrigenda and ai no corrida: none notified

I could not resist installing Whisper on my desktop, and though it is extremely slow in the absence of any kind of dedicated or gaming GPU, it did as well as any commercial solution when I gave it a random voice file of me, with my accent, reading out loud from a Swedish paper. So far three errors in 75 words, and two more where I stumbled. I have not tested it on English yet, but this suggests you could leave it running overnight to do an English interview transcription.

“The difference with this A/B testing is that it doesn’t change your job prospects.”

The moral principle here seems to be if it is important, all must be treated the same unless you have informed consent. What happens if you have a new version of software to release? I believe as a point of logic you need informed consent to test it, you can’t just release it. Otherwise you might hurt people’s job prospects unknowingly.

I get it. Your broader context is social warming is bad, which means social media companies require a more stringent A/B testing burden. Social media is like cigarettes, which needs warning labels and restrictions.

My analogy to social media and the internet in general is the printing press. Yes, we got the 30 years religious wars. That was bad! But the end state is also far better, so really we need to just mitigate the transition pains. And having an end state where you can’t A/B test software is too heavy a hammer. Obviously not something we will agree on. But just wanted to comment that anyone who doesn’t view social media as being as bad as you do (that would be me), will disagree and not find your argument convincing, due to different premises.

No, my point isn’t about social warming. It’s about informed consent. You can think that social media is the best thing ever, but if people don’t know they’re a lab rat, and things that can affect them are done to them, that’s unethical.

The printing press is an invention – once it occurs you can’t live in a world without it, so I don’t think that analogy works. Ditto software: you can choose to install it or not. But on LinkedIn, you couldn’t choose whether or not to participate in the experiment. That’s unethical.

thanks for reply. I understand better now that we have two disagreements. Not just one.

1. A/B tests – your threshold on when you need informed consent is lower than mine

2. harm done by software changes – my belief is amount of harm is small

So if I look at all the apps on my phone homescreen, or think of websites I visit regularly, I don’t believe any of them need informed consent to test changes to their software. For reasons 1 and 2 both. You say NYT doesn’t need informed consent because harm is small, which I agree with. But I feel the same way about LinkedIn changes.

Now that I think about it more, it’s my views which are not mainstream. So again, thanks for reply. For my views see this Alex Tabarrok post:

https://marginalrevolution.com/marginalrevolution/2019/05/why-do-experiments-make-people-uneasy.html