Why mow the actual lawn, when you can play a video game that lets you pretend you’re doing it instead? CC-licensed photo by Tony Alter on Flickr.

You can sign up to receive each day’s Start Up post by email. You’ll need to click a confirmation link, so no spam.

There’s another post coming this week at the Social Warming Substack on Friday at 0845 UK time. Free signup.

A selection of 9 links for you. Stripey. I’m @charlesarthur on Twitter. On Threads: charles_arthur. On Mastodon: https://newsie.social/@charlesarthur. Observations and links welcome.

SCOTUS rejects suit alleging federal government bullied social media into censoring content • Yahoo News

»

The U.S. Supreme Court on Wednesday rejected arguments by Missouri and Louisiana that the federal government violated the First Amendment in its efforts to combat false, misleading and dangerous information online.

In a 6-3 decision written by Justice Amy Coney Barrett, the court held that neither the states nor seven individuals who were co-plaintiffs in the case were able to demonstrate any harm or substantial risk that they will suffer an injury in the future. Therefore, they do not have legal standing to bring a case against the federal government.

Plaintiffs failed to prove that social media platforms acted due to government coercion, Barrett wrote, rather than their own judgment and policies. In fact, she wrote, social media platforms “began to suppress the plaintiffs’ COVID–19 content before the defendants’ challenged communications started.”

Plaintiffs cannot “manufacture standing,” Barrett wrote, “merely by inflicting harm on themselves based on their fears of hypothetical future harm that is not certainly impending.”

The ruling overturns a lower court decision that concluded officials under Presidents Joe Biden and Donald Trump unlawfully coerced social media companies to remove deceptive or inaccurate content out of fears it would fuel vaccine hesitancy or upend elections.

Missouri Attorney General Andrew Bailey, who inherited the lawsuit from his predecessor, has called the federal government’s actions “the biggest violation of the First Amendment in our nation’s history.”

…In his dissent, Justice Samuel Alito wrote that the government’s actions in this case were not “ham-handed censorship” that the court has routinely rejected, but they were coercive and illegal all the same. “It was blatantly unconstitutional,” he wrote, “and the country may come to regret the court’s failure to say so… If a coercive campaign is carried out with enough sophistication, it may get by. That is not a message this court should send.”

«

The dissent (after p29 of the judgment) makes entertaining reading because it’s so full of nonsense. Alito aligns himself with conspiracy theories and antivax nutters. But if, as he says, social media is an important source of news, shouldn’t the news be truthful?


We are losing the battle against election disinformation • The New York Times

Renee DiResta was research director at the Stanford Internet Observatory, a disinformation monitoring project which was shut down recently:

»

The way theories of “the steal” [of the 2020 election] went viral was eerily routine. First, an image or video, such as a photo of a suitcase near a polling place, was posted as evidence of wrongdoing. The poster would tweet the purported evidence, tagging partisan influencers or media accounts with large followings. Those accounts would promote the rumour, often claiming, “Big if true!” Others would join, and the algorithms would push it out to potentially millions more. Partisan media would follow.

If the rumour was found to be false — and it usually was — corrections were rarely made and even then, little noticed. The belief that “the steal” was real led directly to the events of Jan. 6, 2021.

Within a couple of years, the same online rumour mill turned its attention to us — the very researchers who documented it. This spells trouble for the 2024 election.

For us, it started with claims that our work was a plot to censor the right. The first came from a blog related to the Foundation for Freedom Online, the project of a man who said he “ran cyber” at the State Department. This person, an alt-right YouTube personality who’d gone by the handle Frame Game, had been employed by the State Department for just a couple of months.

Using his brief affiliation as a marker of authority, he wrote blog posts styled as research reports contending that our project, the Election Integrity Partnership, had pushed social media networks to censor 22 million tweets. He had no firsthand evidence of any censorship, however: his number was based on a simple tally of viral election rumours that we’d counted and published in a report after the election was over. Right-wing media outlets and influencers nonetheless called it evidence of a plot to steal the election, and their followers followed suit.

«

The recipe for crap: America has perfected it. HG Wells said “we are in a race between education and catastrophe”, and it’s hard to feel that education is winning.


‘It’s impossible to play for more than 30 minutes without feeling I’m about to die’: lawn-mowing games uncut • The Guardian

Rich Pelley:

»

Recreating the act of trimming grass is nothing new. Advanced Lawnmower Simulator for the ZX Spectrum came free on a Your Sinclair magazine cover tape in 1988. Written as an April fool joke by writer Duncan MacDonald, it mocked all the Jet Bike, BMX and Grand Prix simulators by budget game house Codemasters. In spite of this, Advanced Lawnmower Simulator spawned legions of clones and fans and even its own rubbish games competition where people still, to this day, try to write the worst game possible on the ZX Spectrum.

Lawn Mowing Simulator, created by Liverpool-based studio Skyhook Games, is not an April fool joke. It strives for realism and has its own unique gaggle of fans. But why would you want to play a game about something you could easily do in real life? As a journalist, I had to know, so I decided to consult some experts.

“It’s weird that this genre not only exists, but is so popular,” explains Krist Duro, editor-in-chief of Duuro Plays, a video game reviews website based in Albania – and the first person I could find who has actually played and somewhat enjoyed Lawn Mowing Simulator. “But you need to be wired in a particular way. I like repetitive tasks because they allow me to enter into a zen-like state. But the actual simulation part needs to be good.”

Duro namechecks some other simulators I’ve thankfully never heard of: Motorcycle Mechanic 2021, Car Mechanic Simulator, Construction Simulator, Ships Simulator. “These games are huge,” says Duro. “Farming Simulator has sold 25m physical copies and has 90m downloads. PowerWash Simulator sold more than 12m on consoles. As long as the simulators remain engaging, people will show up.”

Duro reviewed the latest VR version of Lawn Mowing Simulator but wasn’t a fan. “Your brain can’t accept that you’re moving in the game while in real life you’re staying still. It made it impossible to play for more than 30 minutes without feeling like I was about to die,” he says. But otherwise, he liked it.

«

There’s a short trailer for the game on YouTube. I can see what Duro means about the dying part.


Tracking as a service: ShareThis to be profiled! • Privacy International

»

Behind their tecchie names, AddThis and ShareThis are simple services: they allow web-developers and less tech-savvy users to integrate social networking “share” buttons on their site. While they might also offer some additional services such as analytics, these tools gained traction mostly by providing an easy and free way to integrate Facebook, Twitter and other social networks share buttons.

Anyone can use any of these services and in a few clicks be provided with a plugin for their site or a few lines of code they can integrate, making it a very simple and accessible service used by millions of people.

However, behind this seemingly helpful and practical service lies a darker truth: these companies make money by tracking and profiling website visitors. By being implemented on hundreds of thousands of websites, these companies are in a unique position to track people on the web, compiling their browsing history into profiles that can then be shared, processed and sold. They are able to do so using different tracking technologies such as cookies or canvas fingerprinting (which AddThis was one of the first to develop and deploy back in 2014).

This basically allows them to give users a unique identifier so that when you visit, for example, Page A about sport and Page B about dogs the company is capable of recording you as a unique individual interested in these two topics.

«

So very predictable, but it’s always worth asking yourself what you’re giving away before you click on these things.
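To make the “Page A about sport and Page B about dogs” example concrete, here’s a minimal sketch of how a shared third-party widget could join two visits into one profile. Everything in it (the `Tracker` class, the `visitor_id` cookie name, the topic labels) is hypothetical and for illustration only; it isn’t AddThis’s or ShareThis’s actual code, and it uses a plain cookie rather than canvas fingerprinting.

```python
import uuid
from collections import defaultdict


class Tracker:
    """Hypothetical third-party widget server (not any real company's code)."""

    def __init__(self):
        # visitor id -> set of topics that visitor has been seen reading
        self.profiles = defaultdict(set)

    def handle_widget_load(self, cookies, page_topic):
        """Runs whenever a page embedding the share button is loaded.

        `cookies` holds whatever the browser sent to the tracker's domain.
        Returns the cookies the tracker asks the browser to keep.
        """
        visitor_id = cookies.get("visitor_id")
        if visitor_id is None:
            # First sighting: mint a random identifier for this browser.
            visitor_id = uuid.uuid4().hex
        self.profiles[visitor_id].add(page_topic)
        return {"visitor_id": visitor_id}


tracker = Tracker()
browser_cookies = {}  # a fresh browser with no tracker cookie yet

# Page A (sport) and Page B (dogs) are different sites using the same widget.
browser_cookies = tracker.handle_widget_load(browser_cookies, "sport")
browser_cookies = tracker.handle_widget_load(browser_cookies, "dogs")

profile = tracker.profiles[browser_cookies["visitor_id"]]
print(sorted(profile))  # ['dogs', 'sport']
```

The point is that neither site needs to share anything with the other: the same identifier comes back to the widget’s own domain on both visits, and that is enough to join them.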


Researchers prove Rabbit AI breach by sending email to us as admin • 404 Media

Jason Koebler:

»

Members of a community focused on jailbreaking and reverse engineering the Rabbit R1 AI assistant device say that Rabbit left critical API keys hardcoded and exposed in its code, which would have allowed them to see and download “all r1 responses ever given.” The API access would have allowed a hacker to use various services, including text-to-speech services and email sending services, as if they were the company. To verify their access, the researchers sent 404 Media emails from internal admin email addresses used by the Rabbit device and the Rabbit team.

The disclosure, which was made on the group’s website and in its Discord Tuesday, is the latest in a comedy of errors for the device, which, under the hood, is essentially just an Android app that runs requests through a series of off-the-shelf APIs like ElevenLabs, which is a text-to-speech AI product. The device’s poor design has been the subject of many articles, investigations, and YouTube videos.

The exposed API keys were discovered by a group called Rabbitude, a community of hackers and developers who have been reverse engineering the Rabbit to explain how it works, find security problems, jailbreak the devices, and add additional features.

«

We – as in the world of tech – don’t seem to be producing many products lately that don’t have gigantic holes either in their security or their usability or their reliability. In order: this, Humane’s AI Pin, LLMs.


Garbage in, garbage out: Perplexity spreads misinformation from spammy AI blog posts • Forbes

Rashi Shrivastava:

»

…even as the startup has come under fire for republishing the work of journalists without proper attribution, Forbes has learned that Perplexity is also citing as authoritative sources AI-generated blogs that contain inaccurate, out of date and sometimes contradictory information.

According to a study conducted by AI content detection platform GPTZero, Perplexity’s search engine is drawing information from and citing AI-generated posts on a wide variety of topics including travel, sports, food, technology and politics. The study determined if a source was AI-generated by running it through GPTZero’s AI detection software, which provides an estimation of how likely a piece of writing was written with AI with a 97% accuracy rate; for the study, sources were only considered AI-generated if GPTZero determined with at least 95% certainty that they were written with AI (Forbes ran them through an additional AI detection tool called DetectGPT which has a 99% accuracy rate to confirm GPTZero’s assessment).

On average, Perplexity users only need to enter three prompts before they encounter an AI-generated source, according to the study, in which over 100 prompts were tested.

“Perplexity is only as good as its sources,” GPTZero CEO Edward Tian said. “If the sources are AI hallucinations, then the output is too.”

Searches like “cultural festivals in Kyoto, Japan,” “impact of AI on the healthcare industry,” “street food must-tries in Bangkok Thailand,” and “promising young tennis players to watch,” returned answers that cited AI-generated materials.

«

Seems like Perplexity can’t catch a break. And probably doesn’t deserve to.
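The study’s rule, as described in the extract, is a simple cutoff: a source only counts as AI-generated if the detector is at least 95% certain. Here’s a rough sketch of that filter. The function name, the example domains and the scores are all invented for illustration; this is not GPTZero’s API or the study’s data.

```python
AI_THRESHOLD = 0.95  # "at least 95% certainty", per the study's description


def flag_ai_sources(scores, threshold=AI_THRESHOLD):
    """Return the sources whose detector score meets the cutoff.

    `scores` maps a source to the detector's estimated probability that the
    text was written with AI. The values used below are made up.
    """
    return [source for source, p in scores.items() if p >= threshold]


# Hypothetical detector output for the sources cited in one answer.
cited = {
    "blog-a.example": 0.99,
    "news-b.example": 0.40,
    "blog-c.example": 0.95,
    "wiki-d.example": 0.94,
}

print(flag_ai_sources(cited))  # ['blog-a.example', 'blog-c.example']
```

A strict cutoff like this only counts the cases the detector is most sure about, so anything scoring just under the line (like the 0.94 above) is left out of the tally.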


Studios • Toys“R”Us

»

Watch the story of a dream come true: The creation of Toys“R”Us and Geoffrey the Giraffe

«

This is a video created by the AI video generator Sora, trying its little heart out to not look like an AI-generated film. As a result, it’s a complete nothing; there’s no depth of meaning, but also none of the dreamlike transitions that AI is capable of (because it has no boundaries about what’s “wrong”).

This sort of stuff is going to be all over the place quite soon, and we’re going to hate it as we should. A smart comment on eX-Twitter points out: “Toys R Us isn’t even really a company anymore because private equity destroyed it. Commercial made by no one to advertise a company that doesn’t exist featuring childhood experiences that will never happen again.”


Instagram doesn’t want you to watch the presidential debate • FWIW News

Kyle Tharp:

»

Just one day before the first general election presidential debate of 2024, social media giant Meta changed users’ settings to automatically limit the amount of political content they can see on Instagram.

Several months ago, Instagram made a widely-publicized change that required users to go to their settings and “opt-in” to seeing political content in their feeds from accounts they do not already follow. Many political creators criticized that move, and encouraged their followers to update their settings and opt-in.

Now, Instagram has gone a step further, changing all users’ settings to automatically restrict political content every time they exit the app.

It’s unclear when the company pushed this update, but it appears to have happened in the past 48 hours and was first brought to my attention by a group of large political accounts Tuesday night.

A Meta spokesman has stated: “This was an error and should not have happened. We’re working on getting it fixed.”

«

Even so: a bit of a weird update to have made, and a strange mistake.


Google Slides is actually hilarious (or trolling) • Medium

Laura Javier:

»

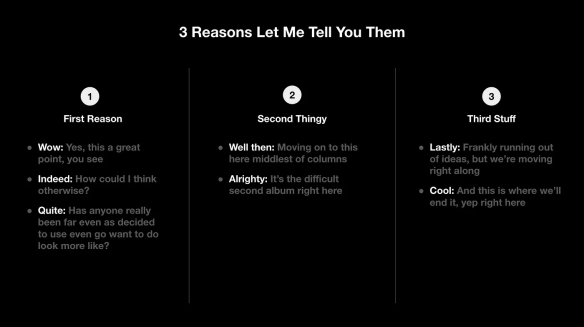

Perhaps like you, I naively started out thinking that Google Slides was just a poorly maintained product suffering from some questionable foundational decisions made ages ago that worshipped at the shrine of PowerPoint and which have never since been revisited, but now, after having had to use it so much in the past year, I believe that Google Slides is actually just trolling me.

I’m a designer, but — stay with me! I don’t think I’m being fussy. This may surprise you, but I’ve become so desperate that I don’t care about nice slide transitions or nice typography. All I really want is to make really basic slides like this…

…without wanting to jettison my laptop into the nearest trash can. Reasonable, no?!

Join me on this cathartic journey which aspires to be none of the following: constructive, systematic, exhaustive. I’m too tired for that, dear reader. Consider this a gag reel. A platter of amuse-bouches. A chocolate sampler box of nightmares.

«

And truly, it turns out that Google Slides is terrible. This piece is from a couple of years ago, but I doubt Google Slides has changed much. The problem, as with much Google stuff, is that it’s effectively abandonware after it reaches a certain point; nothing important or useful gets fixed or improved.


• Why do social networks drive us a little mad?
• Why does angry content seem to dominate what we see?
• How much of a role do algorithms play in affecting what we see and do online?
• What can we do about it?
• Did Facebook have any inkling of what was coming in Myanmar in 2016?

Read Social Warming, my latest book, and find answers – and more.

Errata, corrigenda and ai no corrida: none notified

I look forward to the media reactions when the next Republican administration consults with social media companies about making sure that the news is “truthful”. Most especially when it will concern stopping the spread of “false, misleading and dangerous information” regarding the legitimacy of that Republican victory.

A very cynical analysis of the politics of the social media decision:

Dissent, 3 conservatives: “We’re losing, let’s stop it (short game).”

Majority, 3 liberals: “We’re winning, let’s keep it going (short game).”

Majority, 3 conservatives: “We’re losing NOW, but we’re starting to win (all hail the Great Musk Satan!). Agreed, let’s keep it going, because soon it’ll go our way (long game).”

Because the decision just gave the entire conservative bloc an uncontroversial way to shut down the inevitable lawsuits when the shoe is on the other foot: Sorry, no “standing”, we already ruled previously (the dissenters get to change sides by saying they oppose it in principle, but the law is the law).

[Disclaimer: I’m not a lawyer, just a cynic here]